As high-performance computing (HPC) workloads grow more complex and generative artificial intelligence (AI) is increasingly integrated into modern systems, demand for advanced memory solutions is rising. To meet these requirements, the industry is developing next-generation memory architectures that maximize bandwidth, minimize latency, and improve power efficiency. Advances in DRAM, LPDDR, and specialized memory are redefining computing performance, with AI-optimized memory playing a pivotal role in efficiency and scalability. Winbond's CUBE memory exemplifies this progress, offering a high-bandwidth, low-power solution for AI-driven workloads. This article examines the latest breakthroughs in memory technology, the growing impact of AI applications, and Winbond's strategic moves to address the market's evolving needs.

Advanced Memory Architectures and Performance Scaling

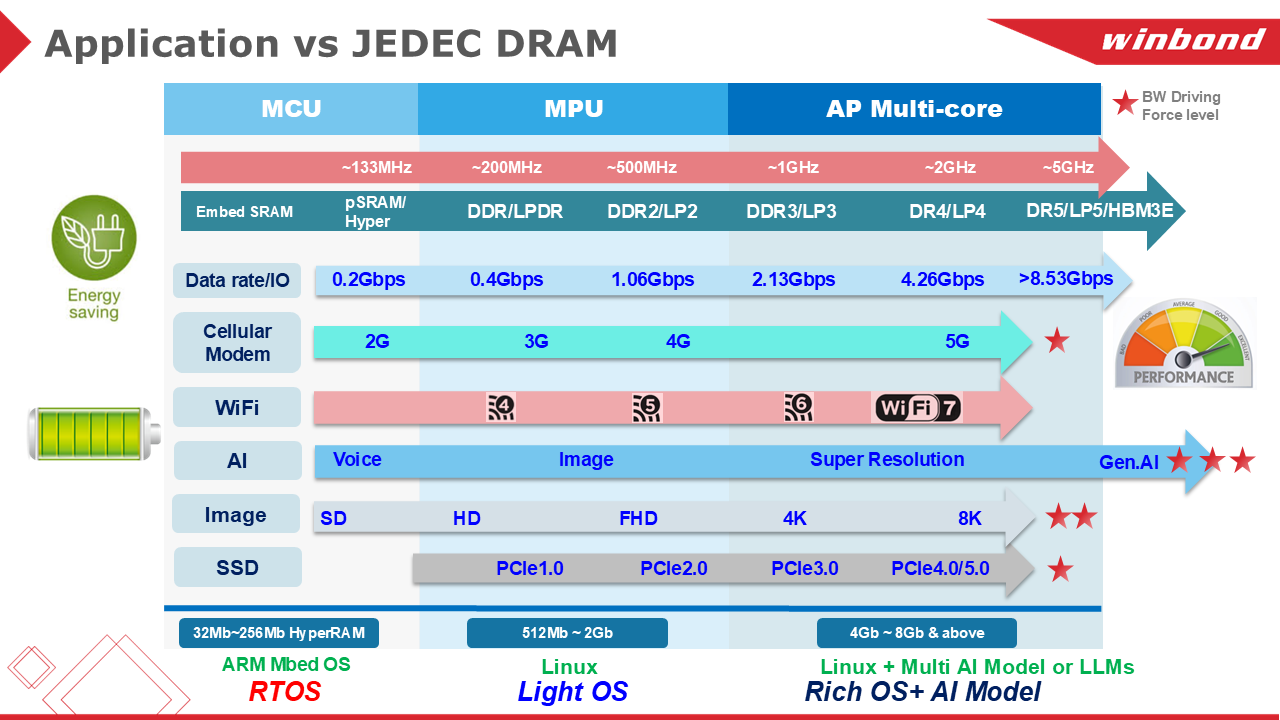

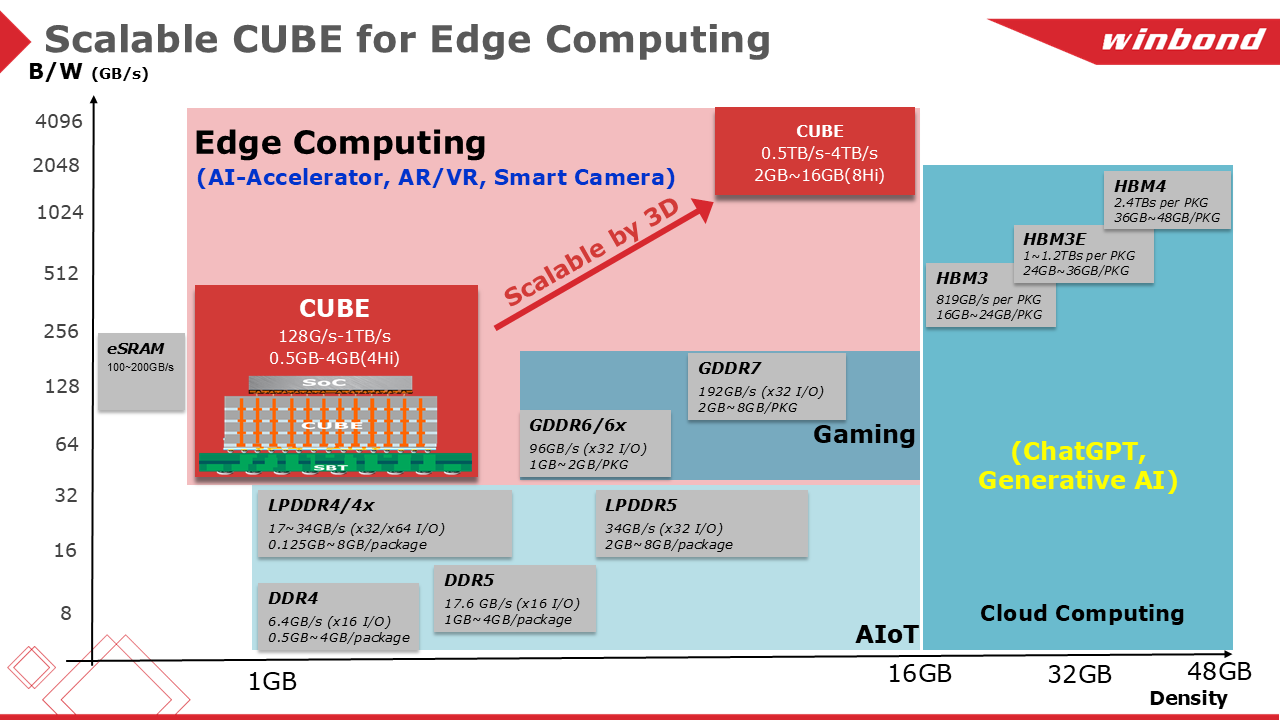

Memory technology is advancing to meet the stringent performance requirements of AI, AIoT, and 5G systems. The industry is undergoing a paradigm shift with the widespread adoption of DDR5 and HBM3E, which offer higher bandwidth and improved energy efficiency. DDR5, with a per-pin data rate of up to 6.4 Gbps, delivers 51.2 GB/s per module, double the 25.6 GB/s of DDR4-3200, while lowering the supply voltage from 1.2V to 1.1V for better power efficiency. HBM3E pushes bandwidth further, exceeding 1.2 TB/s per stack, which makes it compelling for training data-intensive AI models, although its high power requirements make it impractical for mobile and edge deployments.
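The per-module and per-stack figures above follow from one rule: peak bandwidth equals the per-pin data rate multiplied by the data-bus width, divided by 8 bits per byte. The sketch below assumes a 64-bit data bus per DDR module (ECC bits excluded), DDR4-3200 as the DDR4 reference point, and a 1024-bit HBM3E stack interface at an assumed 9.6 Gb/s per pin; the function and constant names are illustrative.

```python
def peak_bandwidth_gbs(rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate (Gb/s) x bus width (bits) / 8."""
    return rate_gbps_per_pin * bus_width_bits / 8


# Assumed interface parameters (illustrative, not vendor-specific):
DDR_MODULE_WIDTH = 64    # data bits per DDR4/DDR5 module, ECC bits excluded
HBM_STACK_WIDTH = 1024   # data bits per HBM stack

ddr4 = peak_bandwidth_gbs(3.2, DDR_MODULE_WIDTH)   # DDR4-3200
ddr5 = peak_bandwidth_gbs(6.4, DDR_MODULE_WIDTH)   # DDR5-6400
hbm3e = peak_bandwidth_gbs(9.6, HBM_STACK_WIDTH)   # assumed 9.6 Gb/s per pin

if __name__ == "__main__":
    print(f"DDR4-3200: {ddr4:.1f} GB/s")        # 25.6 GB/s
    print(f"DDR5-6400: {ddr5:.1f} GB/s")        # 51.2 GB/s
    print(f"HBM3E:     {hbm3e:.1f} GB/s")       # 1228.8 GB/s, about 1.2 TB/s
    print(f"DDR5 / DDR4: {ddr5 / ddr4:.1f}x")   # 2.0x
```

These are theoretical peaks; sustained bandwidth in real systems is lower because of refresh, bank conflicts, and command overhead.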

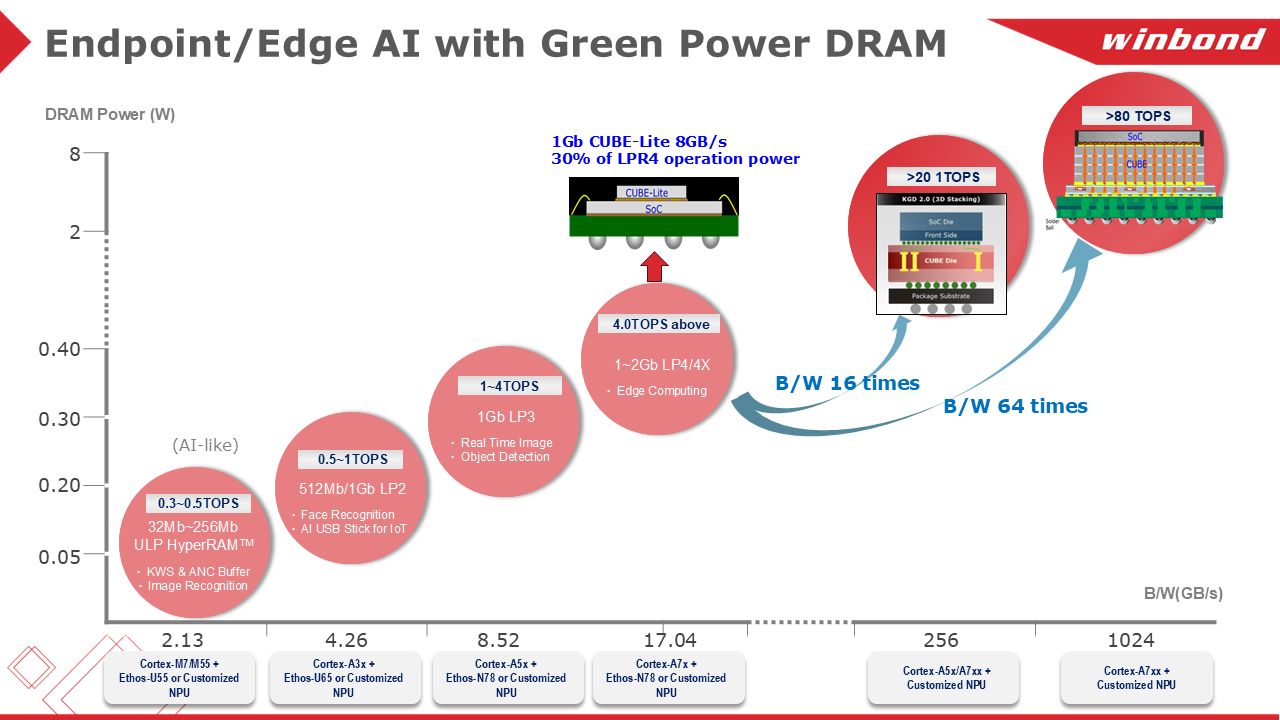

LPDDR6 is projected to exceed 150 GB/s by 2026 as low-power DRAM evolves toward higher throughput and energy efficiency to address the demands of AI smartphones and embedded AI accelerators. Winbond is actively developing small-capacity DDR5 and LPDDR4 solutions optimized for power-sensitive applications while pioneering CUBE memory, which achieves over 1 TB/s of bandwidth with significantly lower thermal dissipation. CUBE capacity is anticipated to scale to 8 GB per set or higher; a four-high wafer-on-wafer (4Hi WoW) stack based on a single reticle size could reach more than 70 GB of density and 40 TB/s of bandwidth. These characteristics position CUBE as a superior alternative to traditional DRAM architectures for AI-driven edge computing.

In addition, CUBE-Lite, a sub-series of CUBE, offers 8 to 16 GB/s of bandwidth (equivalent to LPDDR4x in x16 or x32 configurations) while consuming only 30% of the power of LPDDR4x. Because no LPDDR4 PHY is required, an SoC needs only to integrate the CUBE-Lite controller to match the bandwidth of full-speed LPDDR4x. This eliminates the high cost of PHY licensing and allows the SoC to be built on mature process nodes such as 28nm or even 40nm while achieving performance levels that would otherwise require a 12nm node.

This architecture is particularly suitable for AI-SoCs (combining MCUs, MPUs, and NPUs) and AI-MCUs with integrated NPUs, enabling battery-powered TinyML edge devices. Running Micro Linux alongside on-device AI models, such SoCs can serve low-power AI-ISP edge applications such as IP cameras, AI glasses, and wearable devices, reducing both system power and chip area.
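The LPDDR4x equivalence can be checked with peak-bandwidth arithmetic, and the 30% power figure translates directly into energy per bit at matched bandwidth. The sketch below assumes LPDDR4x running at its 4266 MT/s top speed bin and treats power as a normalized value (LPDDR4x = 1.0), since no absolute power figures are given; at that rate the x16 and x32 results land near the quoted 8 and 16 GB/s. All names are hypothetical.

```python
def peak_bandwidth_gbs(rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) x bus width (bits) / 8."""
    return rate_gbps_per_pin * bus_width_bits / 8


def energy_per_bit(power: float, bandwidth_gbs: float) -> float:
    """Energy per transferred bit: power divided by bandwidth in bits per second."""
    return power / (bandwidth_gbs * 8e9)


LPDDR4X_RATE = 4.266                      # assumed top LPDDR4x speed bin, Gb/s per pin
LPDDR4X_POWER = 1.0                       # normalized power (assumption)
CUBE_LITE_POWER = 0.30 * LPDDR4X_POWER    # "30% of the power of LPDDR4x"

x16 = peak_bandwidth_gbs(LPDDR4X_RATE, 16)   # about 8.5 GB/s
x32 = peak_bandwidth_gbs(LPDDR4X_RATE, 32)   # about 17.1 GB/s

# At matched bandwidth, the energy-per-bit ratio equals the power ratio.
ratio = energy_per_bit(CUBE_LITE_POWER, x16) / energy_per_bit(LPDDR4X_POWER, x16)

if __name__ == "__main__":
    print(f"LPDDR4x x16: {x16:.1f} GB/s, x32: {x32:.1f} GB/s")
    print(f"CUBE-Lite energy per bit relative to LPDDR4x: {ratio:.2f}")  # 0.30
```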

Memory Bottlenecks in Generative AI Deployment

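The exponential growth of generative AI models has created unprecedented constraints on memory bandwidth and latency. AI workloads, particularly those built on transformer-based architectures, require high computational throughput and fast data retrieval: during autoregressive inference, generating each token typically requires reading the full set of model weights from memory, so memory bandwidth often limits the achievable token rate.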

For instance, deploying Llama 2 7B requires at least 7 GB of DRAM for its weights in INT8 mode, or 3.5 GB in INT4 mode, which exposes the limits of conventional mobile memory capacities. Current AI smartphones using LPDDR5 (68 GB/s bandwidth) face significant bottlenecks, necessitating a transition to LPDDR6; in the meantime, interim solutions are needed to bridge the bandwidth gap until LPDDR6 is commercialized.
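The capacity figures follow from parameter count times bits per weight, and the bandwidth bottleneck can be estimated with a common first-order model: if every generated token must stream all weights from DRAM once, the token rate is bounded by bandwidth divided by weight size. This sketch assumes a nominal 7e9 parameters and ignores the KV cache, activations, and compute limits, so its results are optimistic upper bounds rather than measurements; the function names are illustrative.

```python
def weight_footprint_gb(params: float, bits_per_weight: int) -> float:
    """Weight storage in GB (10^9 bytes), ignoring KV cache and activations."""
    return params * bits_per_weight / 8 / 1e9


def max_tokens_per_s(bandwidth_gbs: float, footprint_gb: float) -> float:
    """Upper bound on decode rate when each token reads all weights once."""
    return bandwidth_gbs / footprint_gb


LLAMA2_7B_PARAMS = 7e9     # nominal parameter count (assumption)
LPDDR5_BANDWIDTH = 68.0    # GB/s, the figure quoted in the text

if __name__ == "__main__":
    for name, bits in (("INT8", 8), ("INT4", 4)):
        size = weight_footprint_gb(LLAMA2_7B_PARAMS, bits)
        rate = max_tokens_per_s(LPDDR5_BANDWIDTH, size)
        print(f"{name}: {size:.1f} GB of weights, at most {rate:.1f} tokens/s")
```

Under these assumptions, 68 GB/s caps the INT8 model at about 9.7 tokens/s and the INT4 model at about 19.4 tokens/s, which illustrates why higher-bandwidth memory translates directly into faster on-device generation.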

At the system level, AI edge applications in robotics, autonomous vehicles, and smart sensors impose additional constraints on power efficiency and heat dissipation. While JEDEC standards continue to evolve toward DDR6 and HBM4 to improve bandwidth utilization, custom memory architectures such as Winbond's CUBE provide scalable, high-performance alternatives that align with AI SoC requirements. CUBE integrates HBM-level bandwidth with sub-10W power consumption, making it a viable option for AI inference tasks at the edge.

Thermal Management and Energy Efficiency Constraints

Deploying large-scale AI models on end devices introduces significant thermal management and energy efficiency challenges. AI-driven workloads inherently consume substantial power, generating excessive heat that can degrade system stability and performance.

- On-Device Memory Expansion: Mobile devices must integrate higher-capacity memory to minimize reliance on cloud-based AI processing and reduce latency. Traditional DRAM scaling is approaching physical limits, necessitating hybrid architectures that combine high-bandwidth and low-power memory.

- HBM3E vs CUBE for AI SoCs: While HBM3E achieves high throughput, its power requirements exceed 30W per stack, making it unsuitable for mobile and edge applications. Winbond's CUBE memory can instead serve as an alternative last-level cache (LLC), reducing dependence on on-chip SRAM while maintaining high-speed data access. The shift toward sub-7nm logic processes exacerbates SRAM scaling limitations, increasing the need for next-generation cache solutions.

- Thermal Optimization Strategies: As AI processing generates heat loads exceeding 15W per chip, effective power distribution and dissipation mechanisms are critical. Custom DRAM solutions that optimize refresh cycles and employ TSV-based packaging techniques, such as those used in CUBE, contribute to power-efficient AI execution in compact form factors.
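Why an additional cache level reduces pressure on off-chip DRAM can be illustrated with the textbook average memory access time (AMAT) recurrence: AMAT = hit time + miss rate × (AMAT of the next level). The sketch below compares a hierarchy with only an on-chip SRAM LLC against one that adds a stacked-DRAM cache level, in the spirit of the CUBE-as-LLC idea above. All latencies and miss rates are hypothetical placeholders, not CUBE measurements.

```python
from typing import Sequence, Tuple


def amat_ns(levels: Sequence[Tuple[float, float]], memory_latency_ns: float) -> float:
    """Average memory access time for a cache hierarchy.

    levels: (hit_time_ns, local_miss_rate) pairs ordered from nearest to farthest.
    memory_latency_ns: latency of the backing DRAM reached after the last level.
    """
    t = memory_latency_ns
    # Fold from the farthest level inward: AMAT_i = hit_i + miss_i * AMAT_{i+1}
    for hit_time, miss_rate in reversed(levels):
        t = hit_time + miss_rate * t
    return t


def dram_access_fraction(levels: Sequence[Tuple[float, float]]) -> float:
    """Fraction of requests that miss every cache level and reach DRAM."""
    frac = 1.0
    for _, miss_rate in levels:
        frac *= miss_rate
    return frac


# Hypothetical parameters for illustration only.
DRAM_NS = 100.0
sram_only = [(10.0, 0.4)]                    # on-chip SRAM LLC
with_stacked = [(10.0, 0.4), (30.0, 0.3)]    # SRAM LLC + stacked-DRAM cache level

if __name__ == "__main__":
    for name, cfg in (("SRAM LLC only", sram_only), ("+ stacked cache", with_stacked)):
        print(f"{name}: AMAT {amat_ns(cfg, DRAM_NS):.1f} ns, "
              f"DRAM accesses {dram_access_fraction(cfg):.0%}")
```

With these placeholder numbers, the extra level cuts the share of requests reaching off-chip DRAM from 40% to 12%, which is also where much of the I/O energy is spent.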

DDR5 and DDR6: Accelerating AI Compute Performance

The evolution of DDR5 and DDR6 represents a significant inflexion point in AI system architecture, delivering enhanced memory bandwidth, lower latency, and greater scalability.

DDR5, with an 8-bank-group architecture and on-die ECC, provides superior data integrity and efficiency, making it well suited for AI-enhanced PCs and high-performance laptops. With a peak bandwidth of 51.2 GB/s per module, DDR5 enables real-time AI inference, seamless multitasking, and high-speed data processing. DDR6, still in development, is expected to deliver more than 200 GB/s per module, reduce power consumption by 20%, and add optimized support for AI accelerators, further extending AI compute capabilities.
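DDR5 on-die ECC is commonly described as single-error correction (SEC) over 128 data bits using 8 check bits. The sketch below is a generic textbook Hamming SEC code, not the JEDEC-specified code matrix; it shows why 8 check bits suffice for 128 data bits and how the syndrome locates and corrects a single flipped bit. Function names are illustrative.

```python
import random
from typing import List, Tuple


def num_check_bits(k: int) -> int:
    """Smallest r with 2**r >= k + r + 1, the Hamming bound for SEC."""
    r = 0
    while (1 << r) < k + r + 1:
        r += 1
    return r


def encode(data: List[int]) -> List[int]:
    """Place data bits at non-power-of-two positions (1-indexed) and fill parity bits."""
    r = num_check_bits(len(data))
    n = len(data) + r
    code = [0] * (n + 1)          # index 0 unused so positions are 1-indexed
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):       # not a power of two -> data position
            code[pos] = next(bits)
    for i in range(r):
        p = 1 << i                # parity bit p covers positions with bit i set
        parity = 0
        for pos in range(1, n + 1):
            if pos & p and pos != p:
                parity ^= code[pos]
        code[p] = parity
    return code[1:]


def decode(word: List[int]) -> Tuple[List[int], int]:
    """Return (corrected data bits, 1-indexed error position or 0 if none).

    A SEC code corrects one flipped bit; double-bit errors are miscorrected.
    """
    n = len(word)
    code = [0] + list(word)
    syndrome = 0
    for pos in range(1, n + 1):
        if code[pos]:
            syndrome ^= pos       # XOR of positions holding a 1 is 0 for a valid word
    if 0 < syndrome <= n:
        code[syndrome] ^= 1       # flip the bit the syndrome points to
    data = [code[pos] for pos in range(1, n + 1) if pos & (pos - 1)]
    return data, syndrome


if __name__ == "__main__":
    random.seed(1)
    data = [random.randint(0, 1) for _ in range(128)]
    word = encode(data)
    print(len(word), "bits stored for", len(data), "data bits")   # 136 for 128
    word[42] ^= 1                                                 # inject a single-bit error
    fixed, pos = decode(word)
    print("error at position", pos, "corrected:", fixed == data)  # 43, True
```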

Winbond's Strategic Leadership in AI Memory Development

Winbond's market strategy drives innovation in custom DRAM architectures tailored for AI-centric workloads and embedded processing applications. Key initiatives include:

- CUBE as an AI-Optimized Memory Solution: By leveraging Through-Silicon Via (TSV) interconnects, CUBE integrates high-bandwidth memory characteristics with a low-power profile, making it ideal for AI SoCs in mobile and edge deployments.

- Collaboration with OSAT Partners: Winbond is actively working with Outsourced Semiconductor Assembly and Test (OSAT) providers to enhance integration with next-generation AI hardware, refining memory packaging solutions to improve efficiency and reduce latency.

- Future-Ready DRAM Innovations: With a roadmap focused on AI-tuned DRAM solutions, specialized cache memory designs, and optimized LPDDR architectures, Winbond is poised to address evolving AI application demands in HPC, robotics, and real-time AI processing.

Conclusion

The convergence of AI-driven workloads, performance scaling constraints, and the need for power-efficient memory solutions is shaping the transformation of the memory market. Generative AI continues to accelerate the demand for low-latency, high-bandwidth memory architectures, leading to innovation across DRAM and custom memory solutions.

Winbond's leadership in CUBE memory and DDR5/LPDDR advancements positions it as a key enabler of next-generation AI computing. As AI models scale in complexity, the need for optimized, power-efficient memory architectures will become increasingly critical. Winbond's commitment to technological innovation ensures it remains at the cutting edge of AI memory evolution, bridging the gap between high-performance computing and sustainable, scalable memory solutions.